Usually, when we start learning Machine Learning, we find a ton of information about how to build models, which of course is the core of the topic. But there’s an equally important aspect of ML that is rarely taught in the academic world of Data Science, and that is how to deploy these models. How can I share this useful thing that I’ve done with the rest of the world? Because, at the end of the day… that’s the purpose of our job, right? Making people’s lives easier 😊.

In this post, we’ll learn how to deploy a machine learning model to the cloud and make it available to the rest of the world as an API.

The Workflow

We’ll first store the model in Firebase Storage, then deploy it to AI Platform, where we can version it and analyse it in production. Finally, we’ll make the model available through an API with Firebase Cloud Functions.

What’s the AI Platform? 🧠

AI Platform is a Google Cloud Platform (GCP) service that makes it easier to manage the whole production and deployment process: you don’t have to maintain your own infrastructure, and you pay only for what you use. This lets you scale your product quickly for fast-growing projects.

You can try this and many more experiments on your own FOR FREE by making use of GCP’s 12 month, $300 free trial to get you started.

What are Firebase Cloud Functions?🔥

Essentially, for the purpose of this post, the cloud function will work as an API. We will make the predictions of our model available through a link that any person can make requests to, and receive the response of our model in real time.

What you’ll need

- A model ready to share ✔

- A Google account ✔

yep, that’s all

Getting started

Just for the sake of simplicity, I’m going to assume the model was developed in Python and lives in a Jupyter Notebook. But of course, these steps can be adapted to any other environment.

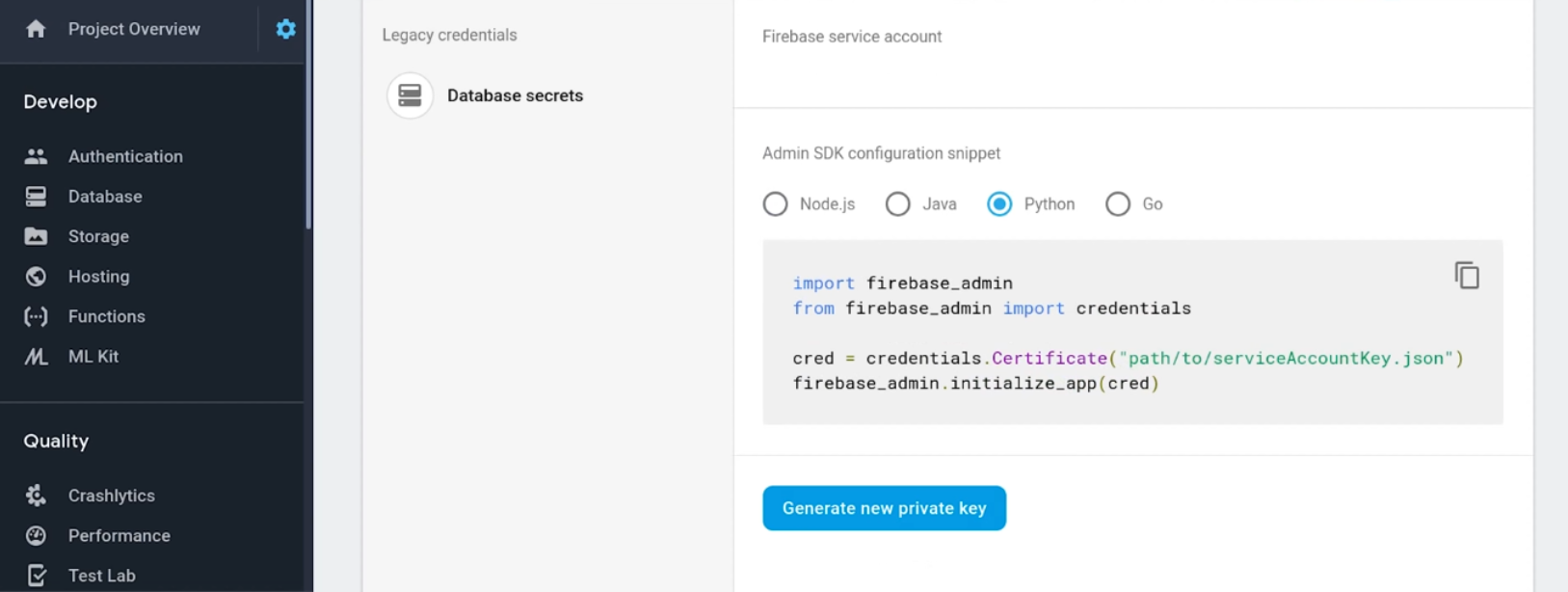

1. Sign in to Firebase

First, sign in to the Firebase Console with your Google account and create a new project. Once you’re inside the Firebase dashboard, go to Project settings > Service accounts > Firebase Admin SDK, select the Python option (in this case), and click Generate new private key. This downloads the JSON file for your service account, which you can save in your notebook’s directory.

Then, install the Firebase Admin SDK package: pip install firebase-admin

2. Store model in Firebase Storage

Once you’ve trained and tested your model it’s ready to upload to AI Platform. But before that, we need to first export and store the model in Firebase Storage, so it can be accessed by AI Platform.

If you’re using a notebook, create a new cell at the end and add the following script, replacing service_account.json with the name of the key file you just downloaded. This will enable the usage of your Firebase account:

import firebase_admin

from firebase_admin import credentials

from firebase_admin import firestore

# Use a service account

if not firebase_admin._apps:

    cred = credentials.Certificate(r'service_account.json')

    firebase_admin.initialize_app(cred)

db = firestore.client()

Now, to run the following code you’ll need to get the Project ID, which you can find again in your Firebase project settings.

Once we have our Project ID, we upload the model by running the following code, after replacing the placeholder with the Project ID. Here, clf is the trained model.

# sklearn.externals.joblib was removed in scikit-learn 0.23; use the standalone joblib package

import joblib

from firebase_admin import storage

joblib.dump(clf, 'model.joblib')

bucket = storage.bucket(name='[YOUR PROJECT ID HERE].appspot.com')

b = bucket.blob('model-v1/model.joblib')

b.upload_from_filename('model.joblib')

print('model uploaded!')

Now we can verify that the model has been correctly uploaded by checking in Firebase Storage inside the specified directory (which in our case is model-v1/).
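The same check can also be done from the notebook. The snippet below is a minimal sketch rather than part of the original workflow: `model_is_uploaded` is a hypothetical helper name, and it assumes the `bucket` object from the upload step (a Cloud Storage bucket, whose blobs expose an `exists()` method).

```python
def model_is_uploaded(bucket, blob_path='model-v1/model.joblib'):
    """Return True if a blob with this path exists in the given bucket.

    `bucket` is assumed to be the object returned by storage.bucket(...)
    in the upload step; `model_is_uploaded` is a hypothetical helper name.
    """
    return bucket.blob(blob_path).exists()


# Usage in the notebook, after the upload cell has run:
# print(model_is_uploaded(bucket))
```

If this returns False, the most likely causes are a typo in the blob path or a bucket name that doesn’t match your Project ID.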

3. Deploy model in AI Platform

Now that the model has been stored, it can be connected to AI Platform.

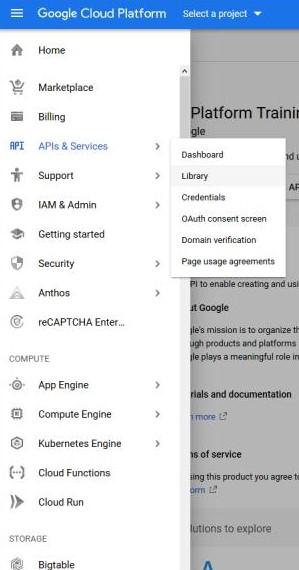

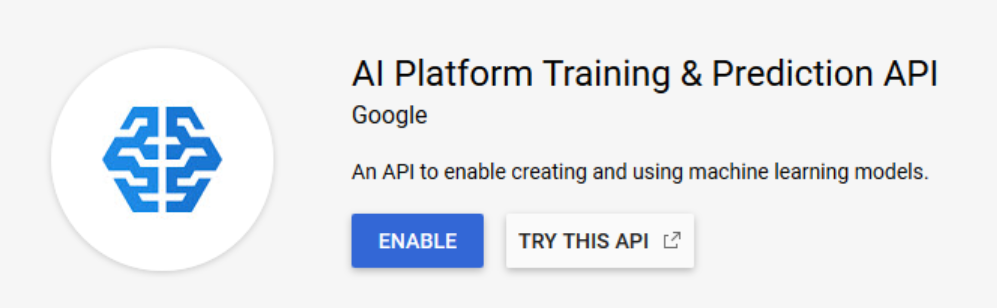

We need to enable a couple of APIs in Google Cloud Platform. On the left panel, inside the Library section, we look for the APIs “AI Platform Training & Prediction API” and “Cloud Build API” and enable them.

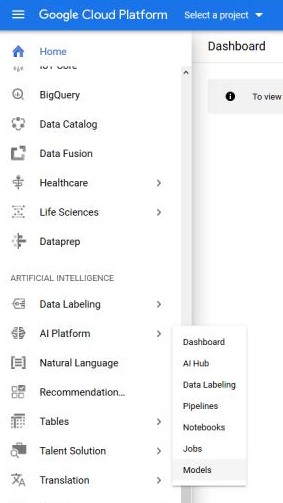

Now, on the left panel, we click on AI Platform > Models, then on Create new model, and enter the corresponding information.

Once we’ve created the model, it’s time to create a version of it, which will point to the .joblib file that we previously stored. We click on the model > New version and fill in the information. It’s important that we choose the same Python version that we used for training the model. We choose scikit-learn as the framework. To find out which scikit-learn version to specify, we can run the following code in our notebook.

import sklearn

print('The scikit-learn version is {}.'.format(sklearn.__version__))
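Since the Python version has to match as well, it can be handy to print both at once. This is only a sketch: `runtime_versions` is a hypothetical helper name, and scikit-learn is reported as None if it isn’t installed in the current environment.

```python
import platform


def runtime_versions():
    """Collect the versions that need to match the AI Platform model version."""
    versions = {'python': platform.python_version()}
    try:
        import sklearn
        versions['scikit-learn'] = sklearn.__version__
    except ImportError:
        # scikit-learn is not available in this environment
        versions['scikit-learn'] = None
    return versions


print(runtime_versions())
```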

When choosing the ML runtime version, you should select the recommended one. The machine type can be left at its default for now.

Finally, we specify the folder in which our .joblib file is located. It’s important to select the folder, not the file! The rest of the fields can be left at their defaults, and then we save. At that point, an instance of our model will be deployed in AI Platform.
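To make the folder-versus-file distinction concrete, here is a small sketch that derives the folder location from the path used in the upload step. `model_folder_uri` is a hypothetical helper, and it assumes the bucket name `[project id].appspot.com` used earlier in this post.

```python
import posixpath


def model_folder_uri(project_id, blob_path):
    """Return the gs:// URI of the folder that contains the uploaded model file."""
    base = 'gs://{}.appspot.com/'.format(project_id)
    folder = posixpath.dirname(blob_path)
    # A file stored at the bucket root has no folder component
    return base + folder + '/' if folder else base


# 'my-project' is a placeholder Project ID
print(model_folder_uri('my-project', 'model-v1/model.joblib'))
# gs://my-project.appspot.com/model-v1/
```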

Now, we’ll be able to make predictions from the command line or from other Google services, such as Cloud Functions, as we’ll see next. Additionally, we’ll be able to get some performance metrics on our model.
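Whichever client you use, an online prediction request to AI Platform carries the input rows under an `instances` key, with each row’s features in the order used for training. The sketch below builds such a body in Python; `build_prediction_body` is a hypothetical helper, and the feature names are the ones from the fake-account example used later in this post.

```python
import json

# Feature order the model was trained with (taken from this post's example;
# replace it with your own model's columns)
FEATURE_ORDER = [
    'account_days_old',
    'followers_count',
    'following_count',
    'publications_count',
]


def build_prediction_body(record, feature_order=FEATURE_ORDER):
    """Turn a dict of named features into an online prediction request body."""
    missing = [name for name in feature_order if name not in record]
    if missing:
        raise ValueError('missing features: {}'.format(', '.join(missing)))
    # Build one row, respecting the training order regardless of dict order
    row = [record[name] for name in feature_order]
    return {'instances': [row]}


body = build_prediction_body({
    'followers_count': 162,
    'account_days_old': 32,
    'publications_count': 45,
    'following_count': 152,
})
print(json.dumps(body))
# {"instances": [[32, 162, 152, 45]]}
```

Building the row from an explicit list of names, instead of relying on the order of the incoming fields, guards against silently feeding the model shuffled features.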

4. Create the Cloud Function

Let’s see how to implement the function!

We’re going to run some commands in the terminal, so make sure you have Node.js installed on your computer. The following commands were run on Windows, but they should also work on Linux and macOS; there, you may need to prefix the global npm install with sudo.

Let’s start by installing the Firebase client: $ npm install -g firebase-tools

We access the Google account: $ firebase login

Initialize a new project directory (make sure you’re in the directory you want to initialize it in): $ firebase init

When running this last command, you’ll be asked several questions. When asked which Firebase features to set up, select Functions. When asked about the Firebase project that you want to use in the directory, choose the one that contains the ML model we previously exported. Select JavaScript as the programming language. We don’t use ESLint, so answer no. And finally, answer yes to installing the dependencies with npm.

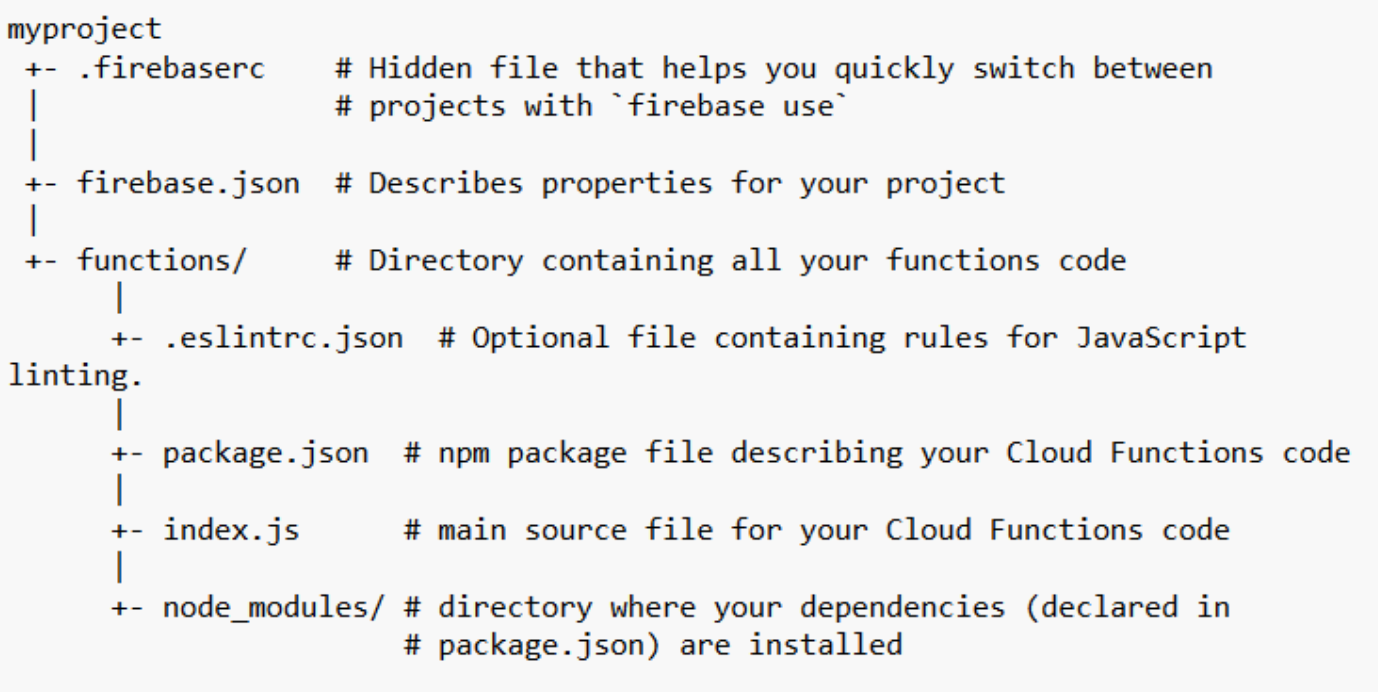

Once the project has been created, the directory will have the following structure:

Inside this directory, we’ll only modify the index.js and the package.json files.

We install the Google APIs client library (run this inside the functions directory, where package.json lives): $ npm i googleapis

Now we check the packages have been installed correctly by opening the package.json file. In case you want to use any other external package in your code you should also add it in this file with its corresponding version.

For now, it should have a structure similar to this:

"dependencies": {

"firebase-admin": "~7.0.0",

"firebase-functions": "^2.3.0",

"googleapis": "^39.2.0"

}

I’ll briefly explain what they do:

- firebase-admin: It’s the Admin SDK, which lets you interact with Firebase from privileged environments.

- firebase-functions: It’s an SDK for the definition of Cloud Functions in Firebase.

- googleapis: It’s the Node.js client library for using Google APIs.

Now let’s see the implementation of the function (we are editing the index.js file), which you can also find in this GitHub repository. As an example, I’ll be using the code to access a simple fake-account detection model.

We start by loading the firebase-functions and firebase-admin modules.

const functions = require('firebase-functions');

const admin = require('firebase-admin');

We initialise the Admin SDK, load the googleapis module, and get a reference to version 1 of the ml API.

admin.initializeApp(functions.config().firebase);

const googleapis_1 = require("googleapis");

const ml = googleapis_1.google.ml('v1');

The requests are going to be sent to an HTTP function.

exports.predictSPAM = functions.https.onRequest(async(request,response)=>

{

We specify the input values of the function. In this example, I’m getting some data about the social media account that my model will use to classify as fake or not. You should specify the fields that you plan to input afterwards to your model.

const account_days_old = request.body.account_days_old;

const followers_count = request.body.followers_count;

const following_count = request.body.following_count;

const publications_count = request.body.publications_count;

After that, we build the input of the model, that is, the input parameters that we’ll send to the model to get the prediction. Note that these inputs should follow the same structure (order of features) with which the model was trained.

const instance = [[account_days_old, followers_count, following_count, publications_count]];

Now, let’s make the request to the Google API. This request needs authentication, which connects our Firebase credentials with the Google API.

const model = "[HERE THE NAME OF YOUR MODEL]";

const { credential } = await googleapis_1.google.auth.getApplicationDefault();

After storing the name of our model in a variable (the name should be the same you gave it in the AI Platform console), we make a prediction call to AI Platform by sending our credentials, the name of the model and the instance that we want the prediction for.

const modelName = `projects/[YOUR PROJECT ID HERE]/models/${model}`;

const preds = await ml.projects.predict({

auth: credential,

name: modelName,

requestBody: {

// the prediction API expects the rows under the "instances" key

instances: instance

}

});

response.send(preds.data['predictions'][0]);

});

5. Deploy the Cloud Function as an API

Once we’ve created the cloud function that accesses the model, we just need to upload it to Firebase to deploy it as an API.

To upload the Firebase function we run the following command in the terminal: $ firebase deploy --only functions

Once the deployment has finished, you get a URL through which the function is accessible. You can find it by logging into the Firebase Console, in the Functions section, under Request, in smaller print.

And that’s all! Now your model is up and running, ready to share! 🎉🎉🎉

You can make requests to this API from a mobile app, a website…it could be integrated anywhere!

6. Test your API with Insomnia

This is, of course, an optional step. If you followed the previous guidelines, your model should be ready to receive requests. However, as a programmer, I like to test things to check that everything works fine.

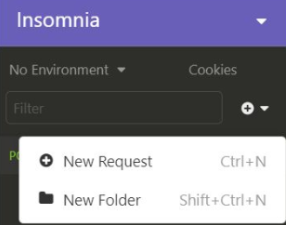

My favourite way to test APIs is by using Insomnia. Insomnia is a REST API client that lets you test your APIs easily. This free desktop app is available for Windows, macOS and Ubuntu. Let’s check if our newly made API works properly!

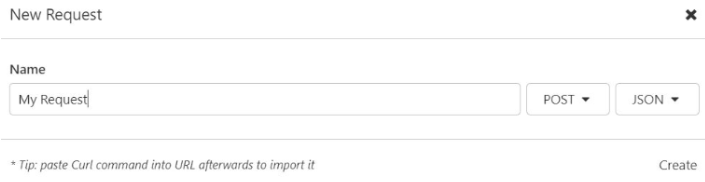

Once we’ve installed the desktop app we can create a new request.

We’ll enter a request name, choose POST as the method, and JSON as the body format.

Once we’ve created the request, we copy the URL of the cloud function and we paste it in the top bar.

We will now write the request following the format that we specified in the function, in my case, it’d be like this:

{

"account_days_old": 32,

"followers_count": 162,

"following_count": 152,

"publications_count": 45

}

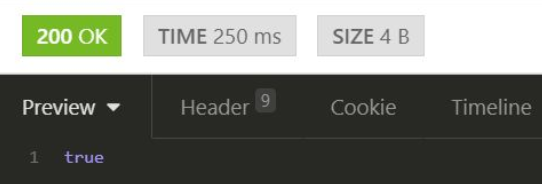

We now hit SEND and we’ll get the response, as well as the response time and its size. If there are any errors, you should receive the corresponding error code instead of the 200 OK message.
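If you’d rather test from code than from a desktop client, the same POST request can be sent with Python’s standard library. This is a minimal sketch under stated assumptions: `FUNCTION_URL` is a placeholder for the URL of your deployed function, and `build_request` and `predict` are hypothetical helper names.

```python
import json
import urllib.request

# Placeholder: replace with the URL of your deployed Cloud Function
FUNCTION_URL = 'https://example.invalid/predictSPAM'


def build_request(url, payload):
    """Build a JSON POST request for the prediction endpoint."""
    data = json.dumps(payload).encode('utf-8')
    headers = {'Content-Type': 'application/json'}
    return urllib.request.Request(url, data=data, headers=headers, method='POST')


def predict(url, payload, timeout=10):
    """Send the request and return (status code, response text).

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses.
    """
    with urllib.request.urlopen(build_request(url, payload), timeout=timeout) as resp:
        return resp.status, resp.read().decode('utf-8')


# Usage, once FUNCTION_URL points at your own function:
# status, text = predict(FUNCTION_URL, {
#     'account_days_old': 32,
#     'followers_count': 162,
#     'following_count': 152,
#     'publications_count': 45,
# })
```

The Content-Type header matters here, since the function reads its inputs from the parsed JSON body (`request.body`).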

The response that you get will, of course, vary depending on your model. But if everything works fine, then congrats! You’re ready to share your model with the rest of the world! 🌍

If you made it this far, thank you for your time and I hope you got some value from this post😊

See you in the next one! 🚀